Normalization and aggregation are two common techniques in data preprocessing for data mining. Both prepare raw data for analysis so that meaningful patterns are easier to extract. Let’s explore each concept:

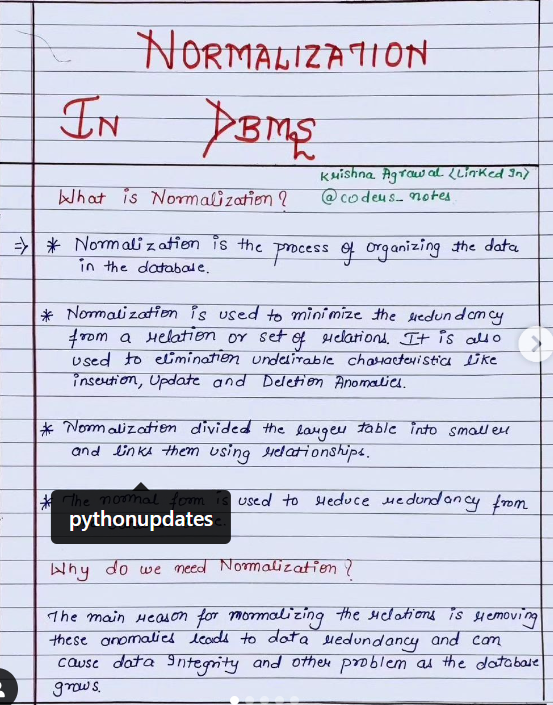

- Normalization:

  - Definition: Normalization is the process of rescaling the numerical features of a dataset so that the values of different variables fall into a similar range. This is particularly important for algorithms that are sensitive to the scale of the input features, such as distance-based algorithms (e.g., k-nearest neighbors) or models trained with gradient-based optimization (e.g., gradient descent).

  - Methods of Normalization:

    - Min-Max Scaling: Rescales the data linearly to a specific range, typically between 0 and 1.

    - Z-score normalization (Standardization): Subtracts the mean and divides by the standard deviation, so the transformed data has a mean of 0 and a standard deviation of 1.

    - Decimal Scaling: Divides the values by a power of 10, shifting the decimal point until the largest absolute value is below 1.

    - Log Transformation: Replaces each value with its logarithm, which compresses data that spans a wide range of values. It requires positive values.
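The four methods listed above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a reference implementation: the function names and the sample `values` list are made up for the example, the z-score uses the population standard deviation, and the log transform uses `log1p` (the logarithm of 1 + x) on the assumption that the inputs are non-negative and may include zeros.

```python
import math
import statistics


def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values into [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: map everything to the lower bound.
        return [new_min for _ in values]
    return [new_min + (v - lo) / (hi - lo) * (new_max - new_min) for v in values]


def z_score(values):
    """Center on the mean and divide by the population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def decimal_scale(values):
    """Divide by the smallest power of 10 that brings max |v| below 1."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return [0.0 for _ in values]
    j = math.floor(math.log10(max_abs)) + 1
    return [v / 10 ** j for v in values]


def log_transform(values):
    """Compress a wide range with log(1 + v); assumes v >= 0."""
    return [math.log1p(v) for v in values]


# Illustrative data only.
values = [10.0, 20.0, 30.0, 40.0, 50.0]

print(min_max_scale(values))   # [0.0, 0.25, 0.5, 0.75, 1.0]
print(z_score(values))         # mean 0, standard deviation 1
print(decimal_scale(values))   # [0.1, 0.2, 0.3, 0.4, 0.5]
print(log_transform(values))
```

In practice, a library such as scikit-learn or pandas would usually handle these transformations; the plain-Python version is only meant to make the arithmetic behind each method visible.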

- Aggregation:

  - Definition: Aggregation involves combining and summarizing data to create a higher-level view. It is particularly useful when dealing with large datasets or when trying to simplify the information for analysis. Aggregation can involve grouping data based on certain attributes and then applying a function to summarize the values within each group.

  - Examples of Aggregation Functions:

    - Sum: Adds up the values within each group.

    - Count: Determines the number of observations in each group.

    - Average/Mean: Calculates the average value within each group.

    - Max/Min: Finds the maximum or minimum value within each group.

    - Standard Deviation/Variance: Measures the spread of values within each group.

  - Grouping: Aggregation often involves grouping data based on one or more attributes. For example, you might aggregate sales data by product category or by time periods.
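To make the group-then-summarize idea concrete, here is a small sketch that aggregates sales by product category using only the Python standard library. The `sales` records, the category names, and the `aggregate_by_key` helper are all hypothetical and exist only for this example; the standard deviation shown is the population standard deviation.

```python
from collections import defaultdict
import statistics

# Hypothetical sales records: (product category, sale amount).
sales = [
    ("books", 12.0),
    ("books", 8.0),
    ("games", 60.0),
    ("games", 40.0),
    ("games", 20.0),
]


def aggregate_by_key(records):
    """Group (key, value) pairs by key and summarize each group."""
    groups = defaultdict(list)
    for key, value in records:
        groups[key].append(value)

    summary = {}
    for key, vals in groups.items():
        summary[key] = {
            "sum": sum(vals),
            "count": len(vals),
            "mean": statistics.fmean(vals),
            "min": min(vals),
            "max": max(vals),
            "std": statistics.pstdev(vals),  # population standard deviation
        }
    return summary


summary = aggregate_by_key(sales)
for category, stats in summary.items():
    print(category, stats)
```

With a dataframe library such as pandas, the same result would typically come from a group-by followed by an aggregation call; the dictionary-based version here just spells out the two steps separately.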

Why are they important in data mining?

- Improved Model Performance: Normalization puts features on a comparable scale, so no feature dominates a distance or gradient computation simply because of its units. This often helps gradient-based algorithms converge faster.

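- Reduced Sensitivity to Outliers: Some transformations, such as the log transformation, compress extreme values and so reduce the influence of outliers. This does not hold for every method: in min-max scaling, a single extreme value sets the range and squeezes the remaining values together.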

- Simplification of Data: Aggregation simplifies large datasets, making it easier to identify patterns and trends.

- Efficient Analysis: Aggregated data is often more manageable and can lead to more efficient data mining processes.

In summary, normalization and aggregation are essential steps in the data preprocessing phase of data mining, contributing to more effective analysis and model building.
